Knowledge

Software Development

+0

How to Create Smooth Navigation Transitions with View Transitions API and React Router?

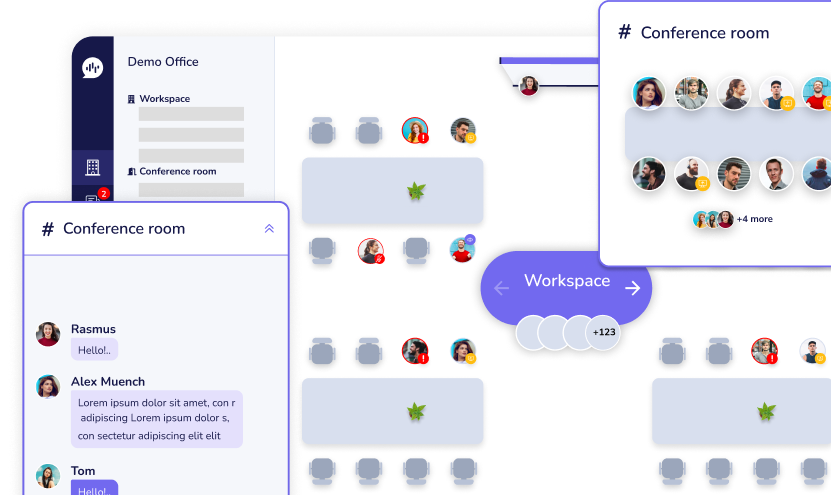

Normally, when users move between pages in a web app, they see a white flash or maybe a skeleton loader. That’s okay, but it doesn’t feel smooth. Try View Transitions API! Imagine you have a homepage showing a list of movie cards. When you click one, it takes you to a detail page with a big banner of the same movie. Right now, there’s no animation between these two screens, so the connection between them feels broken. With the View Transitions API, we can make that connection smoother. It creates animations between pages, helping users feel like they’re staying in the same app instead of jumping from one screen to another. Smooth and connected transition using View Transitions API In this blog, you’ll learn how to create these nice transitions using the View Transitions API and React Router v7. Basic Setup The easiest way to use view transitions is by adding the viewTransition prop to your React Router links: import { NavLink } from 'react-router'; <NavLink to='/movies/avengers-age-of-ultron' viewTransition> Avengers: Age of Ultron </NavLink> Only cross-fade animation without element linking It works — but it still feels a bit plain. The whole page fades, but nothing stands out or feels connected. Animating Specific Elements In the previous example, the entire page takes part in the transition. But sometimes, you want just one specific element — like an image — to animate smoothly from one page to another. Let’s say you want the movie image on the homepage to smoothly turn into the banner on the detail page. We can do that by giving both images the same view-transition-name. // app/routes/home.tsx export default function Home() { return ( <NavLink to='/movies/avengers-age-of-ultron' viewTransition> <img className='card-image' src='/assets/avengers-age-of-ultron.webp' alt='Avengers: Age of Ultron' /> <span>Avengers: Age of Ultron</span> </NavLink> ); } // app/routes/movie.tsx export default function Movie() { return ( <img className='movie-image' src='/assets/avengers-age-of-ultron.webp' alt='Avengers: Age of Ultron' /> ); } // app.css ... /* This class assign to the image of the movie card in the home page */ .card-image { view-transition-name: movie-image; } /* This class assign to the image of the movie in the movie details page */ .movie-image { view-transition-name: movie-image; } ... Now, when you click a movie card, the image will smoothly grow into the banner image on the next page. It feels much more connected and polished. Animating a single element with view-transition-name Handling Dynamic Data This works great for a single element, but what happens if you have a list of items, like multiple movies? If you assign the same view-transition-name to all items, the browser won’t know which one to animate. Each transition name must be unique per element — but hardcoding different class names for every item is not scalable, especially when the data is dynamic. Incorrect setup – Same view-transition-name used for all items in a list. The Solution: Assign view-transition-name during navigation Instead of setting the view-transition-name upfront, a more flexible approach is to add it dynamically when navigation starts — that is, when the user clicks a link. // app/routes/home.tsx export default function Home({ loaderData: movies }: Route.ComponentProps) { return ( <ul> {movies.map((movie) => ( <li key={movie.id}> <NavLink to={`/movies/${movie.id}`} viewTransition> <img className='card-image' src={movie.image} alt={movie.title} /> <span>{movie.title}</span> </NavLink> </li> ))} </ul> ); } // app/routes/movie.tsx export default function Movie({ loaderData: movie }: Route.ComponentProps) { return ( <img className='movie-image' src={movie.image} alt={movie.title} /> ); } // app.css ... /* Assign transition names to elements during navigation */ a.transitioning .card-image { view-transition-name: movie-image; } .movie-image { view-transition-name: movie-image; } ... Final output – Smooth transition with dynamic list items Here’s what happens: When a user clicks a link, React Router adds a transitioning class to it.That class tells the browser which image should animate.On the detail page, the image already has view-transition-name: movie-image, so it matches. This way, you can reuse the same CSS for all items without worrying about assigning unique class names ahead of time. You can explore the full source code below: Live DemoSource on GitHub Browser Support The View Transitions API is still relatively new, and browser support is limited: Chrome (from version 111)Edge (Chromium-based)Firefox & Safari: Not supported yet (as of May 2025) You should always check for support before using it in production. Conclusion The View Transitions API gives us a powerful tool to deliver smooth, native-feeling page transitions in our web apps. By combining it with React Router, you can: Enable basic transitions with minimal setupAnimate specific elements using view-transition-nameHandle dynamic content gracefully by assigning transition names at runtime Hope this guide helps you create more fluid and polished navigation experiences in your React projects!

08/07/2025

119