How to maximize virtual recruitment and training for app development

16/01/2023


Virtual work is not a new concept in the professional world, especially in the tech industry. Many companies are embracing remote and hybrid work to widen their hiring pool and improve efficiency and output. Cielo's 2020 Future of Work Survey supports this trend: it found that 64% of recruiters are now more open to virtual work.

The popularity of remote work skyrocketed with the onset of the Covid-19 pandemic, which caused worldwide lockdowns and the closure of non-essential businesses. To keep their companies running, many CEOs adopted remote work. Although the pandemic is now largely under control and businesses are back at full steam, many companies have retained remote or virtual employment.

However, remote and in-person work require different strategies to reach their full potential. Relying on the same procedures for hiring, onboarding, and training both kinds of workers would be detrimental.

Without face-to-face interaction, it is challenging to assess candidates' potential, hard and soft skills, and professionalism, or to judge whether they would be a good fit for your organization. The good news is that there are several ways to replicate the in-person experience of interviewing, hiring, onboarding, and training employees without wasting time or resources.

This article will discuss ways to maximize virtual recruitment and training for app development.

1. Plan virtual recruitment events

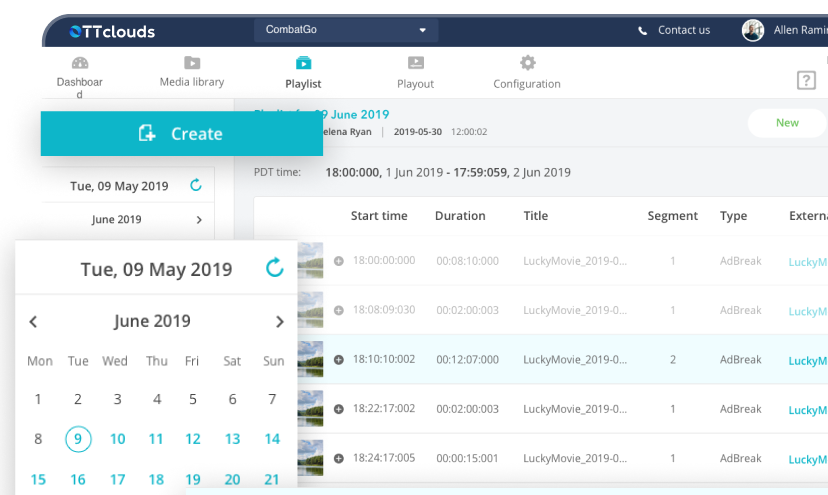

Recruitment events are an excellent opportunity to expand your talent pool, boost brand awareness, and build connections with potential candidates. Virtual job fairs are relatively easy to set up, depending on the platform you choose and any third-party services you integrate with it.

Indeed and Brazen are two of the most popular platforms for organizing job fairs. You can sign up and create your virtual event in minutes. After creating the event, you can link it to other apps, such as Zoom, for video conferencing, although some platforms have video conferencing tools built in.

A day before the event, you can open the 'lobby', let the other interviewers in, and prepare for the fair by customizing greetings and questions.

Brazen is a crowd favorite because of its 'booth' feature, which lets companies share information about their culture and work environment with anyone who registers for the fair. This information can include pictures, videos, short text, and links to other relevant pages.

Once applicants register for a fair, a landing page automatically collects their resumes, cover letters, and additional information.

At the end of a job fair, recruiters can take the next steps with candidates who stood out, such as messaging or emailing them directly, or forwarding their information to the relevant people for further assessment.

2. Request video applications

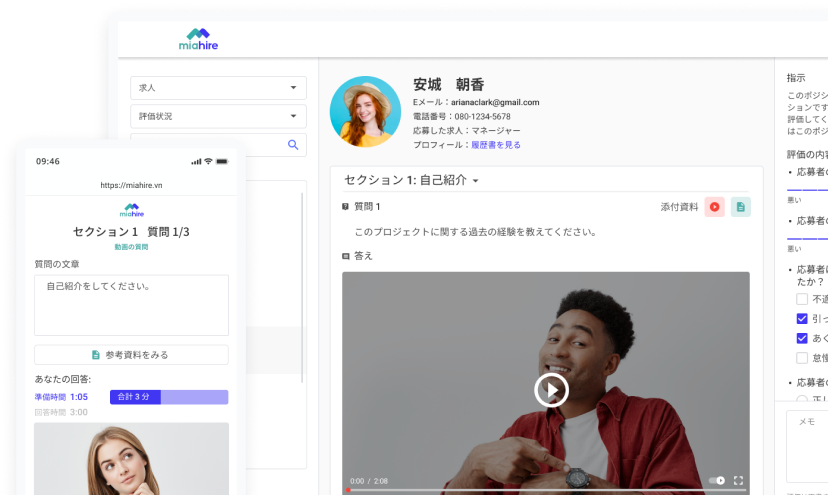

You can opt for video interviews if you want a real feel for a face-to-face interview without the awkward silences, tension, and wait times. Video interviews are also a creative way to gain insight into the behavior of potential employees.

For video interviews, you can send candidates questions to answer in a self-recorded video that they must submit by a set deadline. For instance, you can ask them to answer hypothetical questions directly related to the role they are applying for, or to talk about their strengths, weaknesses, experience, qualifications, and the achievements they are most proud of.

Video interviews can help you infer whether an applicant is confident, honest, ambitious, and skilled, and whether they would be a good fit for your company.

3. Grow your employer brand

Employers want to hire the crème de la crème in each field to build top-notch apps. However, this is easier said than done. If you want to hire the best talent, you have to make sure they know your company exists. If you are just starting out, this can be challenging, especially when you want to recruit remotely.

One way to fix this is by increasing brand awareness. Developers need to know about your company, and they should want to work with you. Start by building your online presence: open accounts on all relevant platforms, such as LinkedIn, Indeed, Twitter, and Facebook. In addition, populate your website with information about your company, such as its culture, vision, mission, portfolio, and events.

Add real pictures of employees at the workplace if you have a physical office, and even create virtual tours so applicants can get a sneak peek into your work environment.

Note: You can outsource your PR work to professionals if you want a highly effective brand presence.

Lastly, monitor reviews of your company on platforms like Glassdoor. They offer an outside perspective on your company that you can learn a lot from.

4. Make use of an applicant tracking system (ATS)

An applicant tracking system (ATS) is a lifesaver for HR teams. Screening, interviewing, and hiring candidates can be overwhelming, especially for jobs that can be done remotely, like app development. If you open the role to any qualified applicant worldwide, you will receive more than the usual number of applications.

An ATS can cut days off the hiring process. It receives and stores resumes, cover letters, and contact information. It also screens candidates and weeds out unqualified ones, reducing the load recruiters have to handle personally.

In addition, it lets candidates see their application status and handles interview scheduling.
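To make the screening step more concrete, here is a minimal Python sketch of the kind of knockout rules an ATS might apply. It does not describe how any particular ATS product works: the `Application` class, the `screen` function, the status names, and the skill and experience criteria are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """Hypothetical pipeline stages a candidate could see in an applicant portal."""
    RECEIVED = "received"
    SCREENED_OUT = "screened out"
    SHORTLISTED = "shortlisted"


@dataclass
class Application:
    """Hypothetical record an ATS might store for each applicant."""
    name: str
    skills: set[str]
    years_experience: int
    status: Status = Status.RECEIVED


def screen(apps: list[Application], required_skills: set[str], min_years: int) -> list[Application]:
    """Apply simple knockout rules, update each status, and return the shortlist."""
    required = {s.lower() for s in required_skills}  # compare skills case-insensitively
    shortlist = []
    for app in apps:
        has_skills = required <= {s.lower() for s in app.skills}
        if has_skills and app.years_experience >= min_years:
            app.status = Status.SHORTLISTED
            shortlist.append(app)
        else:
            app.status = Status.SCREENED_OUT
    return shortlist


# Example: shortlist mobile developers with Kotlin/Android skills and 2+ years' experience.
applications = [
    Application("Ana", {"Kotlin", "Android"}, 4),
    Application("Ben", {"Swift"}, 6),
    Application("Chi", {"kotlin", "android", "Firebase"}, 1),
]
shortlist = screen(applications, {"kotlin", "android"}, min_years=2)
print([a.name for a in shortlist])  # ['Ana']
```

Rules this blunt can screen out strong candidates whose resumes use different wording, so it is worth spot-checking rejected applications rather than trusting a filter entirely.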

5. Keep lines of communication open at all times

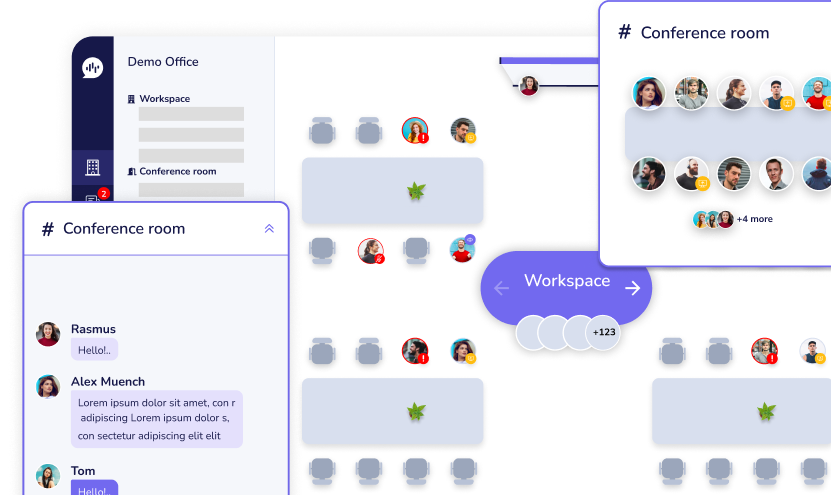

Although virtual hiring may be commonplace for your company, some applicants may be new to the entire process. It can feel isolating and scary because they lack the comfort and camaraderie of the other candidates they would have met at an interview venue. To get the most out of your remote hiring and onboarding process, make sure your potential employees are as comfortable as possible.

You can achieve this by keeping lines of communication open and responsive at all times.

Below, I will share a few tips to make the process easier for candidates.

- Create a comprehensive document that will be shared with all applicants

To avoid confusion and chaos during hiring, you can create a simple document that answers most of the questions candidates may have. For instance, it can cover the video interview date, the stages of the hiring process, who will conduct the interviews, how long the interviews will last, and sample questions candidates can expect.

Candidates are often unsure about video interviews because each company conducts them differently. In the document, let them know whether the interview will be by video or audio only. If they must have their camera on, state the dress code and whether they should join early and wait in the waiting room.

You can also let them know if they need a pen and notepad ready to take notes. By clearly outlining all this, you help candidates arrive confident and prepared.

- Hold AMA sessions

As we mentioned earlier, virtual interviews mean applicants don’t have the luxury of meeting other candidates and sharing extra information about the job or company. To remedy this, you can hold ‘Ask Me Anything’ sessions to let them ask any questions they may have about the organization and role they are applying for.

In addition, you can acquaint them with the hiring process, how long it will take and how they will know if they’ve made it to the next level.

AMA sessions are a great idea because they let candidates get familiar with the company and let you get a feel for the candidates. From how often they ask questions and voice their concerns, you can see which candidates are chatty, confident, and enthusiastic.

Most employers want sociable candidates who aren't afraid to stand out, and AMA sessions give candidates an opportunity to show those qualities.

Bonus: You can record these sessions and reuse them in future hiring rounds. Instead of holding a new session every time you hire, you can share the recordings with potential employees and save time.

6. Take shortlisted candidates for a trial run

If you want to be sure of a candidate's hard skills before you commit to a full-time role, you can make the final stage of the interview process a short paid task that they must complete within a set amount of time.

Alternatively, it can be a simple live test during their interview to ensure they don’t have outside help. Finally, successful candidates can be hired and offered the role in a formal email.

7. Send out welcome packages

Who said virtual hiring has to be impersonal and cold? You can lighten the mood by sending a surprise welcome swag bag to each new hire. It can include a brochure, branded products, a company laptop, you name it. If your pockets are deep, you can throw in a fun extra gift like a box of chocolates or a Starbucks gift card.

Wrapping up

If you adopt most of these tips, hiring and training remote workers can be a seamless and rewarding experience.

Are you developing an app and need a hands-on remote team? Check out Supreme Tech. We are an outsourcing IT company focusing on mobile and web apps for English-speaking clients. We build products using the agile methodology according to each client's requirements, and we maintain them afterward.
